In the mid-1950s, a small group of researchers, among them John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon, came together on a mission that would change the course of history. They sought to create a new field of study, drawing on computer science and the study of the human mind, and they called it artificial intelligence (AI).

Artificial intelligence, or simply AI, is the field concerned with building machines that can perform tasks normally requiring human intelligence. One of its best-known approaches is the artificial neural network, a layered architecture of nodes (or connection points). Each node receives input and passes output to other nodes in the network, a design loosely inspired by the human brain. AI has become a buzzword in recent years, but its history dates back to the mid-20th century. In this article, we will explore the timeline of AI and its evolution into the modern era.

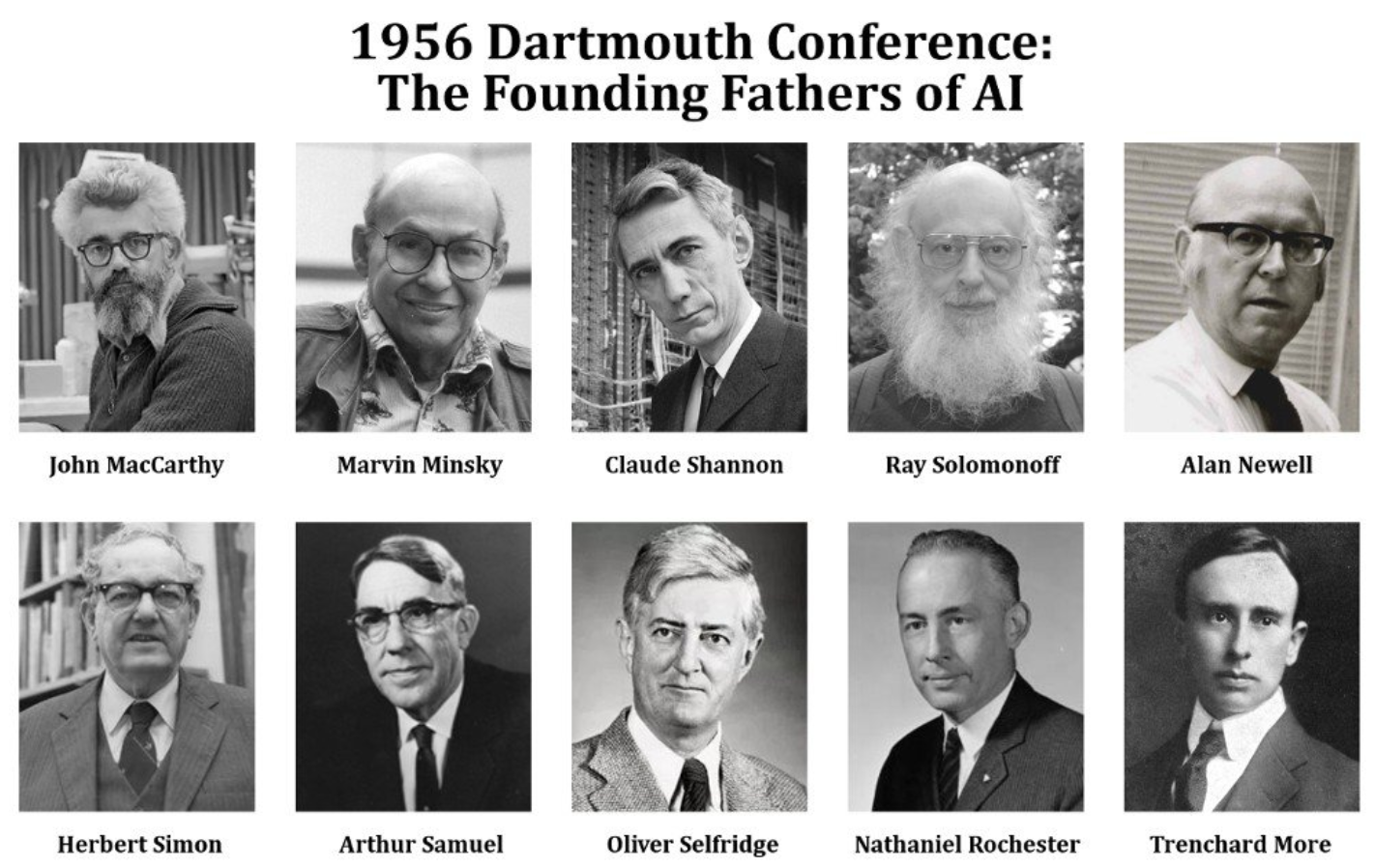

Computer scientists first seriously investigated the idea of building machines with human-like cognitive abilities in the 1950s, which is when artificial intelligence began to emerge as a field. The Dartmouth Conference, widely recognized as the birthplace of AI, was organized in 1956 by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon.

Fun Fact: In 2006, on the 50th anniversary of the Dartmouth Conference, over 100 scholars and researchers convened at Dartmouth for AI@50. The conference celebrated the past, evaluated current progress, and generated new ideas for future research. McCarthy had coined the term "artificial intelligence" in the proposal for the original 1956 conference, and it became the name of the field. From the start, AI aimed to build machines that could carry out tasks that would typically need human intelligence, like comprehending natural language, spotting patterns, and making data-driven decisions.

One of the earliest AI programs associated with healthcare was the ELIZA chatbot, developed by computer scientist Joseph Weizenbaum at MIT in the mid-1960s. Published in 1966, ELIZA simulated a conversation with a psychotherapist using basic pattern matching rather than any real understanding of language. By rephrasing the user's input as questions and reflecting back the user's feelings, ELIZA gave the impression of human-like interaction despite its rudimentary capabilities. It piqued the public's interest in AI and inspired researchers to look into new directions for advancement.
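The rephrase-and-reflect trick described above can be sketched in a few lines of Python. This is only an illustration of the general idea, not Weizenbaum's original script: the rules, the word swaps, and the names `RULES`, `REFLECTIONS`, `reflect`, and `respond` are all assumptions made up for this example.

```python
import re

# Hypothetical word swaps used to turn the user's words back toward them.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "mine": "yours"}

# Hypothetical (pattern, reply template) rules, checked in order.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (.+)", re.IGNORECASE), "Tell me more about your {0}."),
]
DEFAULT_REPLY = "Please go on."


def reflect(fragment):
    """Swap first-person words for second-person ones, word by word."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(user_input):
    """Return the reply for the first rule that matches, else a stock phrase."""
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(reflect(match.group(1)))
    return DEFAULT_REPLY


print(respond("I feel sad about my job."))  # Why do you feel sad about your job?
print(respond("Hello"))                     # Please go on.
```

Nothing here models meaning. The program only matches text and fills in a template, which is why the illusion of understanding breaks down quickly in longer conversations.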

In the early 1970s, the idea of using AI to improve medical diagnosis was still in its infancy. Dr. Edward Shortliffe, then a young physician and computer scientist at Stanford University, was fascinated by the idea of developing an expert system that could help physicians diagnose bacterial infections, a significant cause of morbidity and mortality at the time.

Dr. Shortliffe, along with a team of computer scientists and physicians, set out to develop a prototype of an expert system called MYCIN. The MYCIN system was designed to ask physicians a series of questions about a patient's symptoms and medical history to provide a list of possible diagnoses and recommend treatments based on the input.
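To make the idea of a rule-based expert system concrete, here is a minimal sketch in Python. It assumes a simplified design: each rule carries a strength, each finding carries a certainty between 0 and 1, and evidence for the same conclusion is merged with the certainty-factor formula MYCIN is known for. The rules, finding names, and numbers are invented for illustration and are not medical knowledge or MYCIN's actual rule base. The sketch also evaluates rules in a single forward pass, whereas MYCIN reasoned backward from candidate conclusions to the questions it needed to ask.

```python
def combine_cf(cf_a, cf_b):
    """Merge two positive certainty factors supporting the same conclusion."""
    return cf_a + cf_b * (1 - cf_a)


# Hypothetical rules: (required findings, conclusion, rule strength).
RULES = [
    ({"gram_negative", "rod_shaped"}, "organism_a", 0.6),
    ({"gram_negative", "hospital_acquired"}, "organism_b", 0.5),
    ({"rod_shaped", "urinary_source"}, "organism_a", 0.4),
]


def diagnose(findings):
    """Map findings (name -> certainty in [0, 1]) to ranked conclusions."""
    beliefs = {}
    for premises, conclusion, strength in RULES:
        # A rule fires only if every one of its premises was reported.
        if not all(premise in findings for premise in premises):
            continue
        # A rule is only as certain as its weakest premise.
        rule_cf = strength * min(findings[premise] for premise in premises)
        beliefs[conclusion] = combine_cf(beliefs.get(conclusion, 0.0), rule_cf)
    return sorted(beliefs.items(), key=lambda item: item[1], reverse=True)


answers = {"gram_negative": 1.0, "rod_shaped": 1.0, "urinary_source": 1.0}
for conclusion, certainty in diagnose(answers):
    print(f"{conclusion}: {certainty:.2f}")
```

With these made-up inputs, two rules support `organism_a`, so its combined certainty ends up higher than either rule would give alone. That accumulation of partial evidence is the core of the approach.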

After several years of development, the team evaluated MYCIN against real cases of bacterial infection. The results were striking. In a blinded evaluation published in 1979, expert reviewers rated MYCIN's treatment recommendations as acceptable about as often as those of infectious disease specialists, and in some comparisons more often. Although MYCIN was never put into routine clinical use, its development demonstrated the potential of AI to assist physicians in making more accurate diagnoses and improving patient outcomes.

The success of MYCIN was a significant milestone in the early history of AI in healthcare. Dr. Shortliffe's work opened up new possibilities for the application of AI in medical diagnosis and paved the way for future developments in the field of expert systems.

The use of AI in military applications dates back to at least the 1980s, with the development of "smart" weapons that could make some decisions autonomously.

The Strategic Computing Initiative (SCI), launched by DARPA in 1983, marked a major milestone in AI and computing technologies. Driven by a desire to maintain America's technological edge in computing for national security, the SCI funded work in areas such as speech recognition and natural language processing. That research and development laid the foundation for many of the advanced AI technologies we use today. Rumors of secret activities have circulated, but there is no concrete evidence for them. What is clear is that the SCI played a critical role in advancing AI technologies and in cementing America's position as a leader in the field. Today, researchers continue to build on the SCI's legacy.

Despite these developments in the healthcare and military sectors, progress in AI stalled for long stretches of the 1970s and 1980s. These periods became known as "AI winters," a term coined to describe the stagnation and disillusionment in the field. Researchers struggled to solve hard technical problems and to turn their work into useful AI applications.

Funding for AI research dried up, in part because the computers and data storage of the day were slow, bulky, and expensive. Many researchers left the field to work in other branches of computer science. Professor Marvin Minsky, a leading figure in AI, remained undaunted. Minsky is remembered for warning in the mid-1980s that a winter was coming for AI, while remaining confident that the field would rise again.

In the early 1990s, the field of artificial intelligence was still struggling to recover from the AI winter. Progress was slow, and funding was scarce. A group of researchers at Carnegie Mellon University, who had been working on autonomous vehicles since the mid-1980s, was about to achieve a breakthrough that would go down in the history books as part of the revival of artificial intelligence.

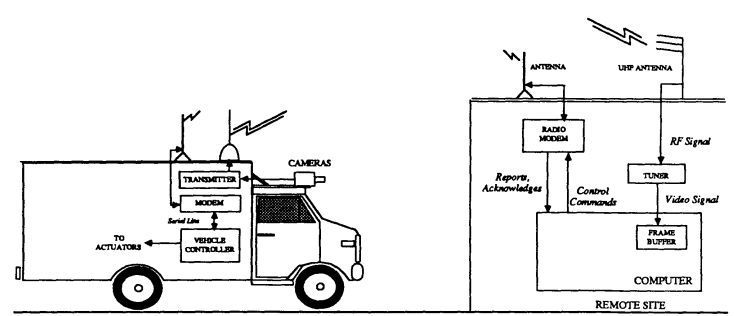

Their project was called "NavLab," and it involved developing an AI-powered car that could drive autonomously. The project was met with scepticism and doubt. The idea of a self-driving car seemed almost impossible, but the team refused to give up. After years of collecting data and refining their algorithms, they put the car to a very public test. In 1995, during a cross-country trip nicknamed "No Hands Across America," a NavLab vehicle steered itself for roughly 98% of the drive from Pittsburgh to San Diego, while human operators handled the throttle and brakes. The NavLab project proved that with perseverance and innovation, even the most seemingly impossible challenges can be overcome.

It was a true comeback story for AI, helping to spark a new era of development in the field. Today, autonomous vehicles are on the cusp of becoming an everyday reality, with companies like Tesla and Waymo leading the charge.

AI has been used in education for several decades, with well-known early systems dating back to the 1980s. In 1984, researchers at Carnegie Mellon University developed the LISP Tutor, an intelligent tutoring system (ITS) that used AI to personalize the learning experience for students based on their individual needs and progress. The system was used to teach computer programming and was successful in improving student performance.

In the 1990s, AI-powered educational software continued to be developed, with the introduction of systems like AutoTutor, which used natural language processing to simulate a conversation between a student and a tutor. Another example is the Adaptive Learning Environments Model (ALEM), which analyzed student data and adapted the learning content accordingly.

AI in healthcare has made significant strides since the early 2000s. One development with an especially large impact is the emergence of machine learning techniques, which have helped to automate and improve medical diagnosis and treatment.

Machine learning algorithms can analyze vast amounts of data and identify patterns that are difficult for humans to detect, allowing doctors to make more accurate diagnoses and develop more effective treatment plans. For example, in 2017, researchers at Stanford University introduced CheXNet, an algorithm that they reported could detect pneumonia on chest X-rays more accurately than the practicing radiologists it was compared against.

Another major development in AI and healthcare has been the rise of digital health technologies. These technologies, which include wearables, mobile apps, and other connected devices, have allowed patients to take a more active role in their own healthcare and enabled doctors to remotely monitor patients and provide more personalized care.

For instance, in 2015, the FDA approved the first mobile app that could be used to manage insulin dosing in patients with diabetes. The app used AI algorithms to analyze a patient's blood sugar levels, food intake, and other factors to provide personalized insulin dose recommendations in real time.

The 21st century has seen significant advances in the use of AI in warfare, some of which have the potential to fundamentally change the nature of conflict. One major development has been the rise of unmanned aerial vehicles (UAVs), or drones, that use AI algorithms to support a variety of military operations.

Drones have been used for everything from reconnaissance and surveillance to precision strikes on enemy targets. For example, in 2011, a US drone strike in Yemen killed Anwar al-Awlaki, an American-born al-Qaeda leader who had been living there. Drones of that period were remotely piloted, with human operators making the targeting decisions, but they have come to rely on increasingly automated navigation and sensor processing.

Another major development in AI and warfare has been the emergence of autonomous weapons systems, or "killer robots," which can operate without human intervention. These weapons systems use AI algorithms to make decisions about when and where to engage targets, raising ethical concerns about the potential for unintended harm to civilians.

In 2017, a Group of Governmental Experts convened at the United Nations in Geneva to discuss the potential risks of autonomous weapons systems, and many participants have since called for a ban on their development and use. No binding international ban has been agreed, and concerns remain about the impact of these technologies on the future of warfare.

Mark Esper, a former US Secretary of Defense, has offered a contrasting view by highlighting the significance of integrating AI into military operations. Esper argued in a speech in 2020 that "AI and machine learning will enable us to maintain our competitive edge and enhance our lethality" and that AI is "an enabler of other emerging technologies, such as autonomy and hypersonics."

The rise of AI has been a story of perseverance, as scientists and engineers worked to break through the boundaries of what is achievable. The field has come a long way from the early pioneers to the contemporary era of intelligent machines, and we can expect even more fascinating advances in the future. AI is not just about developing intelligent machines; it is about using technology to make a positive impact on the world around us. To quote Andrew Ng, co-founder of Google Brain and former Chief Scientist at Baidu, "The potential of AI is limitless, and the technology will become an essential part of our daily lives, augmenting our capabilities and providing new solutions to complex problems."

To conclude, AI has become a crucial element in building a sustainable future, and its integration into various industries and personal activities has been growing rapidly. It is high time we embraced the potential of AI and explored its applications, including how it can help us make quicker decisions.